Re-localization

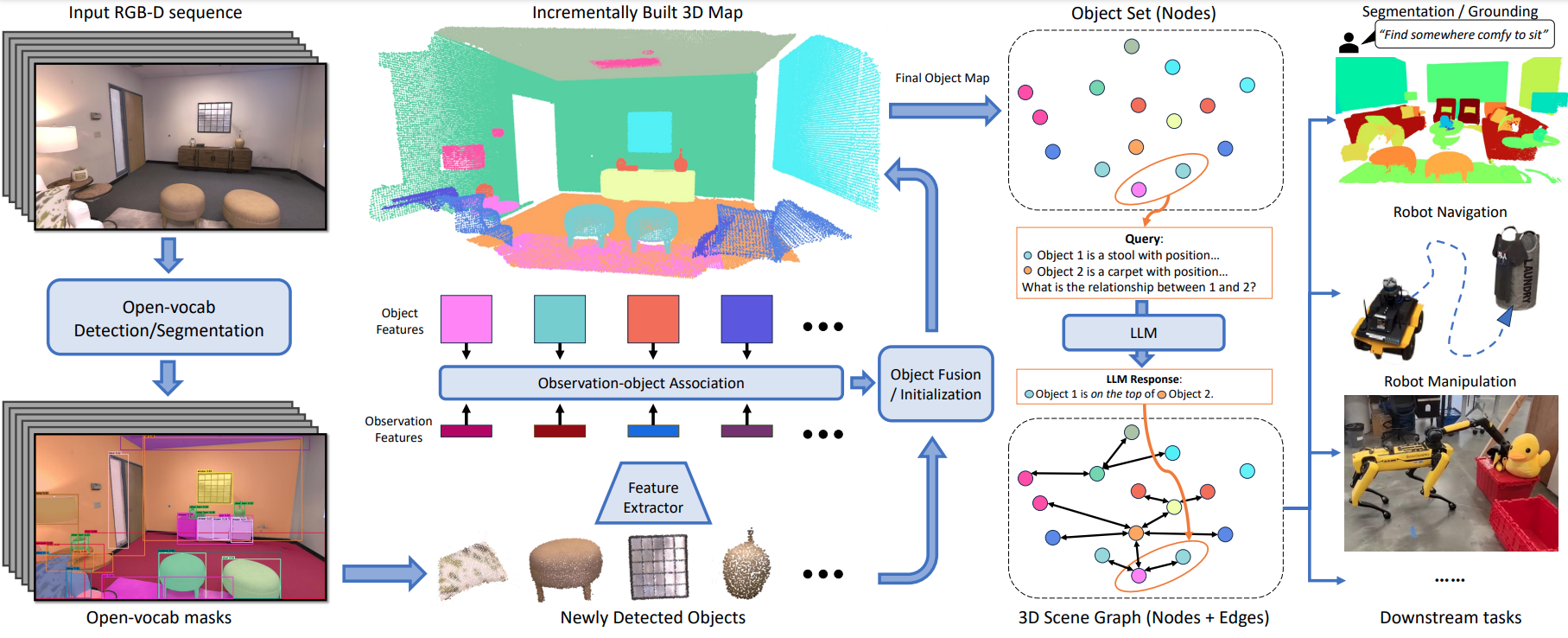

We employ ConceptGraphs to localize the robot in a previously mapped environment. Each object in the map stores a CLIP embedding, which serves as a landmark descriptor in a landmark-based particle filter that computes particle weights from cosine similarity. Within a few iterations, the robot localizes accurately. In our explainer video, we also demonstrate mapping newly detected objects that were not present in the original map.
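
To make the weighting step concrete, here is a minimal sketch of one measurement update in a landmark-based particle filter. It is not our actual implementation. Only the use of cosine similarity between embeddings comes from the description above. The planar pose `(x, y, theta)`, the Gaussian distance term with `sigma`, the best-match association over landmarks, and all function names are assumptions made for illustration.

```python
import numpy as np


def cosine_similarity(a, b):
    """Pairwise cosine similarity between rows of a (M, D) and rows of b (K, D)."""
    a = a / np.linalg.norm(a, axis=-1, keepdims=True)
    b = b / np.linalg.norm(b, axis=-1, keepdims=True)
    return a @ b.T  # shape (M, K)


def update_weights(particles, weights, map_xy, map_emb, obs_xy, obs_emb, sigma=0.5):
    """One measurement update (illustrative only).

    particles: (N, 3) hypothesised poses [x, y, theta] in the map frame.
    map_xy, map_emb: (K, 2) landmark positions and (K, D) embeddings from the map.
    obs_xy, obs_emb: (M, 2) detected object positions in the robot frame
                     and their (M, D) embeddings.
    sigma: assumed standard deviation (metres) of the position error.
    """
    # Semantic agreement between each observation and each mapped object.
    sim = np.clip(cosine_similarity(obs_emb, map_emb), 0.0, 1.0)  # (M, K)

    # Project every observation into the map frame under every particle's pose.
    c, s = np.cos(particles[:, 2]), np.sin(particles[:, 2])
    ox, oy = obs_xy[:, 0], obs_xy[:, 1]
    wx = particles[:, 0:1] + c[:, None] * ox - s[:, None] * oy
    wy = particles[:, 1:2] + s[:, None] * ox + c[:, None] * oy
    world = np.stack([wx, wy], axis=-1)  # (N, M, 2)

    # Geometric agreement: Gaussian of the distance to each landmark.
    d2 = ((world[:, :, None, :] - map_xy[None, None, :, :]) ** 2).sum(axis=-1)
    geom = np.exp(-0.5 * d2 / sigma**2)  # (N, M, K)

    # For each observation keep the best landmark match, scaled by similarity.
    lik = (geom * sim[None, :, :]).max(axis=2) + 1e-12  # (N, M)

    new_weights = weights * lik.prod(axis=1)
    return new_weights / new_weights.sum()


def resample(particles, weights, rng):
    """Multinomial resampling; returns resampled particles with uniform weights."""
    n = len(particles)
    idx = rng.choice(n, size=n, p=weights)
    return particles[idx], np.full(n, 1.0 / n)
```

In this sketch, a particle scores highly only when detected objects land close to mapped objects that also look alike in embedding space, so repeated update and resample steps concentrate the particles around poses that are consistent with the map.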